Question 1

What is the name of the method that transforms categorical features into a series of binary indicator

feature variables?

- A. Leave-one-out encoding

- B. Target encoding

- C. One-hot encoding

- D. Categorical

- E. String indexing

Answer:

C

Explanation:

The method that transforms categorical features into a series of binary indicator variables is known

as one-hot encoding. This technique converts each categorical value into a new binary column, which

is essential for models that require numerical input. One-hot encoding is widely used because it

helps to handle categorical data without introducing a false ordinal relationship among categories.

Reference:

Feature Engineering Techniques (One-Hot Encoding).

Comments

Question 2

A data scientist wants to parallelize the training of trees in a gradient boosted tree to speed up the

training process. A colleague suggests that parallelizing a boosted tree algorithm can be difficult.

Which of the following describes why?

- A. Gradient boosting is not a linear algebra-based algorithm which is required for parallelization

- B. Gradient boosting requires access to all data at once which cannot happen during parallelization.

- C. Gradient boosting calculates gradients in evaluation metrics using all cores which prevents parallelization.

- D. Gradient boosting is an iterative algorithm that requires information from the previous iteration to perform the next step.

Answer:

D

Explanation:

Gradient boosting is fundamentally an iterative algorithm where each new tree is built based on the

errors of the previous ones. This sequential dependency makes it difficult to parallelize the training

of trees in gradient boosting, as each step relies on the results from the preceding step.

Parallelization in this context would undermine the core methodology of the algorithm, which

depends on sequentially improving the model's performance with each iteration.

Reference:

Machine Learning Algorithms (Challenges with Parallelizing Gradient Boosting).

Gradient boosting is an ensemble learning technique that builds models in a sequential manner. Each

new model corrects the errors made by the previous ones. This sequential dependency means that

each iteration requires the results of the previous iteration to make corrections. Here is a step-by-

step explanation of why this makes parallelization challenging:

Sequential Nature: Gradient boosting builds one tree at a time. Each tree is trained to correct the

residual errors of the previous trees. This requires the model to complete one iteration before

starting the next.

Dependence on Previous Iterations: The gradient calculation at each step depends on the predictions

made by the previous models. Therefore, the model must wait until the previous tree has been fully

trained and evaluated before starting to train the next tree.

Difficulty in Parallelization: Because of this dependency, it is challenging to parallelize the training

process. Unlike algorithms that process data independently in each step (e.g., random forests),

gradient boosting cannot easily distribute the work across multiple processors or cores for

simultaneous execution.

This iterative and dependent nature of the gradient boosting process makes it difficult to parallelize

effectively.

Reference

Gradient Boosting Machine Learning Algorithm

Understanding Gradient Boosting Machines

Comments

Question 3

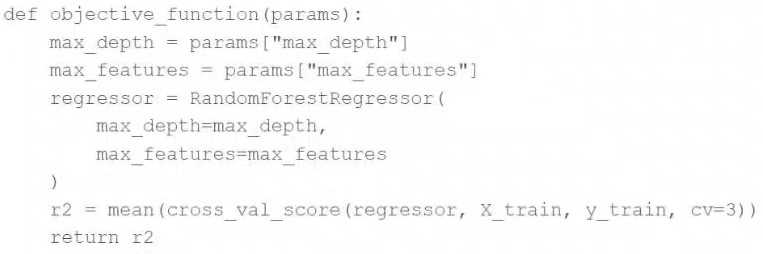

A data scientist wants to efficiently tune the hyperparameters of a scikit-learn model. They elect to

use the Hyperopt library's fmin operation to facilitate this process. Unfortunately, the final model is

not very accurate. The data scientist suspects that there is an issue with the objective_function being

passed as an argument to fmin.

They use the following code block to create the objective_function:

Which of the following changes does the data scientist need to make to their objective_function in

order to produce a more accurate model?

- A. Add test set validation process

- B. Add a random_state argument to the RandomForestRegressor operation

- C. Remove the mean operation that is wrapping the cross_val_score operation

- D. Replace the r2 return value with -r2

- E. Replace the fmin operation with the fmax operation

Answer:

D

Explanation:

When using the Hyperopt library with fmin, the goal is to find the minimum of the objective

function. Since you are using cross_val_score to calculate the R2 score which is a measure of the

proportion of the variance for a dependent variable that's explained by an independent variable(s) in

a regression model, higher values are better. However, fmin seeks to minimize the objective

function, so to align with fmin's goal, you should return the negative of the R2 score (-r2). This way,

by minimizing the negative R2, fmin is effectively maximizing the R2 score, which can lead to a more

accurate model.

Reference

Hyperopt Documentation: http://hyperopt.github.io/hyperopt/

Scikit-Learn documentation on model evaluation: https://scikit-learn.org/stable/modules/model_evaluation.html

Comments

Question 4

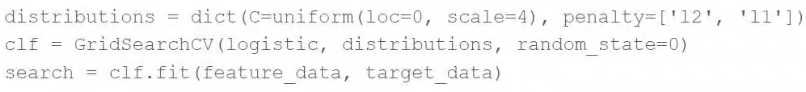

A data scientist is attempting to tune a logistic regression model logistic using scikit-learn. They want

to specify a search space for two hyperparameters and let the tuning process randomly select values

for each evaluation.

They attempt to run the following code block, but it does not accomplish the desired task:

Which of the following changes can the data scientist make to accomplish the task?

- A. Replace the GridSearchCV operation with RandomizedSearchCV

- B. Replace the GridSearchCV operation with cross_validate

- C. Replace the GridSearchCV operation with ParameterGrid

- D. Replace the random_state=0 argument with random_state=1

- E. Replace the penalty= ['12', '11'] argument with penalty=uniform ('12', '11')

Answer:

A

Explanation:

The user wants to specify a search space for hyperparameters and let the tuning process randomly

select values. GridSearchCV systematically tries every combination of the provided hyperparameter

values, which can be computationally expensive and time-consuming. RandomizedSearchCV, on the

other hand, samples hyperparameters from a distribution for a fixed number of iterations. This

approach is usually faster and still can find very good parameters, especially when the search space is

large or includes distributions.

Reference

Scikit-Learn documentation on hyperparameter tuning: https://scikit-learn.org/stable/modules/grid_search.html#randomized-parameter-optimization

Comments

Question 5

Which of the following tools can be used to parallelize the hyperparameter tuning process for single-

node machine learning models using a Spark cluster?

- A. MLflow Experiment Tracking

- B. Spark ML

- D. Autoscaling clusters

- E. Delta Lake

Answer:

B

Explanation:

Spark ML (part of Apache Spark's MLlib) is designed to handle machine learning tasks across multiple

nodes in a cluster, effectively parallelizing tasks like hyperparameter tuning. It supports various

machine learning algorithms that can be optimized over a Spark cluster, making it suitable for

parallelizing hyperparameter tuning for single-node machine learning models when they are

adapted to run on Spark.

Reference

Apache Spark MLlib Guide:

https://spark.apache.org/docs/latest/ml-guide.html

Spark ML is a library within Apache Spark designed for scalable machine learning. It provides tools to

handle large-scale machine learning tasks, including parallelizing the hyperparameter tuning process

for single-node machine learning models using a Spark cluster. Here’s a detailed explanation of how

Spark ML can be used:

Hyperparameter Tuning with CrossValidator: Spark ML includes the CrossValidator and

TrainValidationSplit classes, which are used for hyperparameter tuning. These classes can evaluate

multiple sets of hyperparameters in parallel using a Spark cluster.

from pyspark.ml.tuning import CrossValidator, ParamGridBuilder

from pyspark.ml.evaluation import BinaryClassificationEvaluator

# Define the model

model = ...

# Create a parameter grid

paramGrid = ParamGridBuilder() \

.addGrid(model.hyperparam1, [value1, value2]) \

.addGrid(model.hyperparam2, [value3, value4]) \

.build()

# Define the evaluator

evaluator = BinaryClassificationEvaluator()

# Define the CrossValidator

crossval = CrossValidator(estimator=model,

estimatorParamMaps=paramGrid,

evaluator=evaluator,

numFolds=3)

Parallel Execution: Spark distributes the tasks of training models with different hyperparameters

across the cluster’s nodes. Each node processes a subset of the parameter grid, which allows

multiple models to be trained simultaneously.

Scalability: Spark ML leverages the distributed computing capabilities of Spark. This allows for

efficient processing of large datasets and training of models across many nodes, which speeds up the

hyperparameter tuning process significantly compared to single-node computations.

Reference

Apache Spark MLlib Documentation

Hyperparameter Tuning in Spark ML

Comments

Question 6

Which of the following describes the relationship between native Spark DataFrames and pandas API

on Spark DataFrames?

- A. pandas API on Spark DataFrames are single-node versions of Spark DataFrames with additional metadata

- B. pandas API on Spark DataFrames are more performant than Spark DataFrames

- C. pandas API on Spark DataFrames are made up of Spark DataFrames and additional metadata

- D. pandas API on Spark DataFrames are less mutable versions of Spark DataFrames

- E. pandas API on Spark DataFrames are unrelated to Spark DataFrames

Answer:

C

Explanation:

Pandas API on Spark (previously known as Koalas) provides a pandas-like API on top of Apache Spark.

It allows users to perform pandas operations on large datasets using Spark's distributed compute

capabilities. Internally, it uses Spark DataFrames and adds metadata that facilitates handling

operations in a pandas-like manner, ensuring compatibility and leveraging Spark's performance and

scalability.

Reference

pandas API on Spark documentation:

https://spark.apache.org/docs/latest/api/python/user_guide/pandas_on_spark/index.html

Comments

Question 7

A data scientist has written a data cleaning notebook that utilizes the pandas library, but their

colleague has suggested that they refactor their notebook to scale with big data.

Which of the following approaches can the data scientist take to spend the least amount of time

refactoring their notebook to scale with big data?

- A. They can refactor their notebook to process the data in parallel.

- B. They can refactor their notebook to use the PySpark DataFrame API.

- C. They can refactor their notebook to use the Scala Dataset API.

- D. They can refactor their notebook to use Spark SQL.

- E. They can refactor their notebook to utilize the pandas API on Spark.

Answer:

E

Explanation:

The data scientist can refactor their notebook to utilize the pandas API on Spark (now known as

pandas on Spark, formerly Koalas). This allows for the least amount of changes to the existing

pandas-based code while scaling to handle big data using Spark's distributed computing capabilities.

pandas on Spark provides a similar API to pandas, making the transition smoother and faster

compared to completely rewriting the code to use PySpark DataFrame API, Scala Dataset API, or

Spark SQL.

Reference:

Databricks documentation on pandas API on Spark (formerly Koalas).

Comments

Question 8

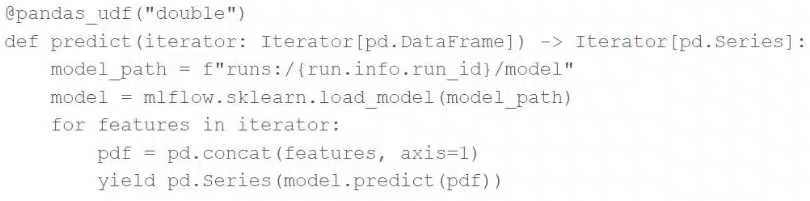

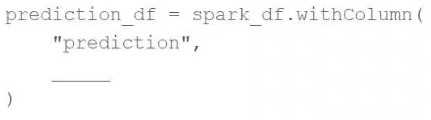

A data scientist has defined a Pandas UDF function predict to parallelize the inference process for a

single-node model:

They have written the following incomplete code block to use predict to score each record of Spark

DataFrame spark_df:

Which of the following lines of code can be used to complete the code block to successfully complete

the task?

- A. predict(*spark_df.columns)

- B. mapInPandas(predict)

- C. predict(Iterator(spark_df))

- D. mapInPandas(predict(spark_df.columns))

- E. predict(spark_df.columns)

Answer:

B

Explanation:

To apply the Pandas UDF predict to each record of a Spark DataFrame, you use the mapInPandas

method. This method allows the Pandas UDF to operate on partitions of the DataFrame as pandas

DataFrames, applying the specified function (predict in this case) to each partition. The correct code

completion to execute this is simply mapInPandas(predict), which specifies the UDF to use without

additional arguments or incorrect function calls.

Reference:

PySpark DataFrame documentation (Using mapInPandas with UDFs).

Comments

Question 9

Which of the Spark operations can be used to randomly split a Spark DataFrame into a training

DataFrame and a test DataFrame for downstream use?

- A. TrainValidationSplit

- B. DataFrame.where

- C. CrossValidator

- D. TrainValidationSplitModel

- E. DataFrame.randomSplit

Answer:

E

Explanation:

The correct method to randomly split a Spark DataFrame into training and test sets is by using the

randomSplit method. This method allows you to specify the proportions for the split as a list of

weights and returns multiple DataFrames according to those weights. This is directly intended for

splitting DataFrames randomly and is the appropriate choice for preparing data for training and

testing in machine learning workflows.

Reference:

Apache Spark DataFrame API documentation (DataFrame Operations: randomSplit).

Comments

Question 10

A data scientist is using Spark ML to engineer features for an exploratory machine learning project.

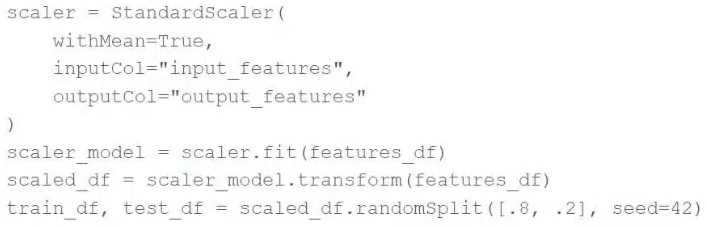

They decide they want to standardize their features using the following code block:

Upon code review, a colleague expressed concern with the features being standardized prior to

splitting the data into a training set and a test set.

Which of the following changes can the data scientist make to address the concern?

- A. Utilize the MinMaxScaler object to standardize the training data according to global minimum and maximum values

- B. Utilize the MinMaxScaler object to standardize the test data according to global minimum and maximum values

- C. Utilize a cross-validation process rather than a train-test split process to remove the need for standardizing data

- D. Utilize the Pipeline API to standardize the training data according to the test data's summary statistics

- E. Utilize the Pipeline API to standardize the test data according to the training data's summary statistics

Answer:

E

Explanation:

To address the concern about standardizing features prior to splitting the data, the correct approach

is to use the Pipeline API to ensure that only the training data's summary statistics are used to

standardize the test data. This is achieved by fitting the StandardScaler (or any scaler) on the training

data and then transforming both the training and test data using the fitted scaler. This approach

prevents information leakage from the test data into the model training process and ensures that the

model is evaluated fairly.

Reference:

Best Practices in Preprocessing in Spark ML (Handling Data Splits and Feature Standardization).

Comments

Page 1 out of 7

Viewing questions 1-10 out of 74

page 2