Question 1

A data engineer has left the organization. The data team needs to transfer ownership of the data

engineer’s Delta tables to a new data engineer. The new data engineer is the lead engineer on the

data team.

Assuming the original data engineer no longer has access, which of the following individuals must be

the one to transfer ownership of the Delta tables in Data Explorer?

- A. Databricks account representative

- B. This transfer is not possible

- C. Workspace administrator

- D. New lead data engineer

- E. Original data engineer

Answer:

C

Explanation:

The workspace administrator is the only individual who can transfer ownership of the Delta tables in

Data Explorer, assuming the original data engineer no longer has access. The workspace

administrator has the highest level of permissions in the workspace and can manage all resources,

users, and groups. The other options are either not possible or not sufficient to perform the

ownership transfer. The Databricks account representative is not involved in the workspace

management. The transfer is possible and not dependent on the original data engineer. The new lead

data engineer may not have the necessary permissions to access or modify the Delta tables, unless

granted by the workspace administrator or the original data engineer before

leaving. Reference:

Workspace access control

,

Manage Unity Catalog object ownership

.

Comments

Question 2

A data analyst has created a Delta table sales that is used by the entire data analysis team. They want

help from the data engineering team to implement a series of tests to ensure the data is clean.

However, the data engineering team uses Python for its tests rather than SQL.

Which of the following commands could the data engineering team use to access sales in PySpark?

- A. SELECT * FROM sales

- B. There is no way to share data between PySpark and SQL.

- C. spark.sql("sales")

- D. spark.delta.table("sales")

- E. spark.table("sales")

Answer:

E

Explanation:

The data engineering team can use the spark.table method to access the Delta table sales in PySpark.

This method returns a DataFrame representation of the Delta table, which can be used for further

processing or testing.

The spark.table method works for any table that is registered in the Hive

metastore or the Spark catalog, regardless of the file format1

.

Alternatively, the data engineering

team can also use the DeltaTable.forPath method to load the Delta table from its

path2. Reference: 1

:

SparkSession | PySpark 3.2.0 documentation 2

:

Welcome to Delta Lake’s Python

documentation page — delta-spark 2.4.0 documentation

Comments

Question 3

Which of the following commands will return the location of database customer360?

- A. DESCRIBE LOCATION customer360;

- B. DROP DATABASE customer360;

- C. DESCRIBE DATABASE customer360;

- D. ALTER DATABASE customer360 SET DBPROPERTIES ('location' = '/user'};

- E. USE DATABASE customer360;

Answer:

C

Explanation:

The command DESCRIBE DATABASE customer360; will return the location of the database

customer360, along with its comment and properties. This command is an alias for DESCRIBE

SCHEMA customer360;, which can also be used to get the same information.

The other commands

will either drop the database, alter its properties, or use it as the current database, but will not

return its location12

. Reference:

DESCRIBE DATABASE | Databricks on AWS

DESCRIBE DATABASE - Azure Databricks - Databricks SQL

Comments

Question 4

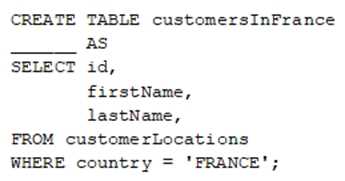

A data engineer wants to create a new table containing the names of customers that live in France.

They have written the following command:

A senior data engineer mentions that it is organization policy to include a table property indicating

that the new table includes personally identifiable information (PII).

Which of the following lines of code fills in the above blank to successfully complete the task?

- A. There is no way to indicate whether a table contains PII.

- B. "COMMENT PII"

- C. TBLPROPERTIES PII

- D. COMMENT "Contains PII"

- E. PII

Answer:

D

Explanation:

In Databricks, when creating a table, you can add a comment to columns or the entire table to

provide more information about the data it contains. In this case, since it’s organization policy to

indicate that the new table includes personally identifiable information (PII), option D is correct. The

line of code would be added after defining the table structure and before closing with a

semicolon. Reference:

Data Engineer Associate Exam Guide

,

CREATE TABLE USING (Databricks SQL)

Comments

Question 5

Which of the following benefits is provided by the array functions from Spark SQL?

- A. An ability to work with data in a variety of types at once

- B. An ability to work with data within certain partitions and windows

- C. An ability to work with time-related data in specified intervals

- D. An ability to work with complex, nested data ingested from JSON files

- E. An ability to work with an array of tables for procedural automation

Answer:

D

Explanation:

The array functions from Spark SQL are a subset of the collection functions that operate on array

columns1

.

They provide an ability to work with complex, nested data ingested from JSON files or

other sources2

.

For example, the explode function can be used to transform an array column into

multiple rows, one for each element in the array3

.

The array_contains function can be used to check

if a value is present in an array column4

. The array_join function can be used to concatenate all

elements of an array column with a delimiter.

These functions can be useful for processing JSON data

that may have nested arrays or objects. Reference: 1

:

Spark SQL, Built-in Functions - Apache

Spark 2

:

Spark SQL Array Functions Complete List - Spark By Examples 3

:

Spark SQL Array Functions -

Syntax and Examples - DWgeek.com 4

:

Spark SQL, Built-in Functions - Apache Spark

:

Spark SQL,

Built-in Functions - Apache Spark

: [Working with Nested Data Using Higher Order Functions in SQL

on Databricks - The Databricks Blog]

Comments

Question 6

Which of the following commands can be used to write data into a Delta table while avoiding the

writing of duplicate records?

- A. DROP

- B. IGNORE

- C. MERGE

- D. APPEND

- E. INSERT

Answer:

C

Explanation:

The MERGE command can be used to upsert data from a source table, view, or DataFrame into a

target Delta table. It allows you to specify conditions for matching and updating existing records, and

inserting new records when no match is found.

This way, you can avoid writing duplicate records into

a Delta table1

.

The other commands (DROP, IGNORE, APPEND, INSERT) do not have this functionality

and may result in duplicate records or data loss234. Reference: 1: Upsert into a Delta Lake table using

merge | Databricks on AWS 2: SQL DELETE | Databricks on AWS 3: SQL INSERT INTO | Databricks on

AWS 4

: SQL UPDATE | Databricks on AWS

Comments

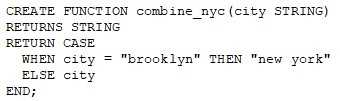

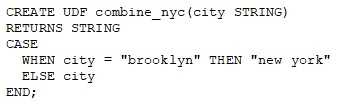

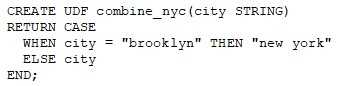

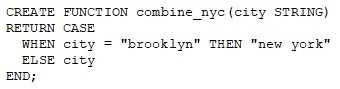

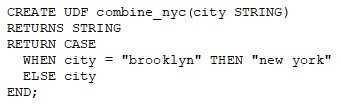

Question 7

A data engineer needs to apply custom logic to string column city in table stores for a specific use

case. In order to apply this custom logic at scale, the data engineer wants to create a SQL user-

defined function (UDF).

Which of the following code blocks creates this SQL UDF?

A.

B.

C.

D.

E.

- A. Option A

- B. Option B

- C. Option C

- D. Option D

Answer:

A

Explanation:

https://www.databricks.com/blog/2021/10/20/introducing-sql-user-defined-functions.html

Comments

Question 8

A data analyst has a series of queries in a SQL program. The data analyst wants this program to run

every day. They only want the final query in the program to run on Sundays. They ask for help from

the data engineering team to complete this task.

Which of the following approaches could be used by the data engineering team to complete this

task?

- A. They could submit a feature request with Databricks to add this functionality.

- B. They could wrap the queries using PySpark and use Python’s control flow system to determine when to run the final query.

- C. They could only run the entire program on Sundays.

- D. They could automatically restrict access to the source table in the final query so that it is only accessible on Sundays.

- E. They could redesign the data model to separate the data used in the final query into a new table.

Answer:

B

Explanation:

This approach would allow the data engineering team to use the existing SQL program and add some

logic to control the execution of the final query based on the day of the week. They could use

the datetime module in Python to get the current date and check if it is a Sunday. If so, they could

run the final query, otherwise they could skip it. This way, they could schedule the program to run

every day without changing the data model or the source table. Reference:

PySpark SQL

Module

,

Python datetime Module

,

Databricks Jobs

Comments

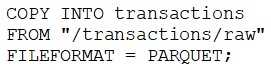

Question 9

A data engineer runs a statement every day to copy the previous day’s sales into the table

transactions. Each day’s sales are in their own file in the location "/transactions/raw".

Today, the data engineer runs the following command to complete this task:

After running the command today, the data engineer notices that the number of records in table

transactions has not changed.

Which of the following describes why the statement might not have copied any new records into the

table?

- A. The format of the files to be copied were not included with the FORMAT_OPTIONS keyword.

- B. The names of the files to be copied were not included with the FILES keyword.

- C. The previous day’s file has already been copied into the table.

- D. The PARQUET file format does not support COPY INTO.

- E. The COPY INTO statement requires the table to be refreshed to view the copied rows.

Answer:

C

Explanation:

The COPY INTO statement is an idempotent operation, which means that it will skip any files that

have already been loaded into the target table1

. This ensures that the data is not duplicated or

corrupted by multiple attempts to load the same file. Therefore, if the data engineer runs the same

command every day without specifying the names of the files to be copied with the FILES keyword or

a glob pattern with the PATTERN keyword, the statement will only copy the first file that matches the

source location and ignore the rest.

To avoid this problem, the data engineer should either use the

FILES or PATTERN keywords to filter the files to be copied based on the date or some other criteria,

or delete the files from the source location after they are copied into the table2. Reference: 1

:

COPY

INTO | Databricks on AWS 2

:

Get started using COPY INTO to load data | Databricks on AWS

Comments

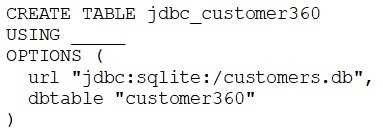

Question 10

A data engineer needs to create a table in Databricks using data from their organization’s existing

SQLite database.

They run the following command:

Which of the following lines of code fills in the above blank to successfully complete the task?

- A. org.apache.spark.sql.jdbc

- B. autoloader

- C. DELTA

- D. sqlite

- E. org.apache.spark.sql.sqlite

Answer:

D

Explanation:

: In the given command, a data engineer is trying to create a table in Databricks using data from an

SQLite database. The correct option to fill in the blank is “sqlite” because it specifies the type of

database being connected to in a JDBC connection string. The USING clause should be followed by

the format of the data, and since we are connecting to an SQLite database, “sqlite” would be

appropriate here. Reference:

Create a table using JDBC

JDBC connection string

SQLite JDBC driver

Comments

Page 1 out of 10

Viewing questions 1-10 out of 109

page 2