Question 1

[Security Architecture]

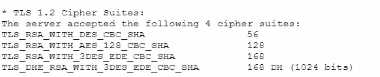

A vulnerability scan on a web server identified the following:

Which of the following actions would most likely eliminate on-path decryption attacks? (Select two).

- A. Disallowing cipher suites that use ephemeral modes of operation for key agreement

- B. Removing support for CBC-based key exchange and signing algorithms

- C. Adding TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA256

- D. Implementing HIPS rules to identify and block BEAST attack attempts

- E. Restricting cipher suites to only allow TLS_RSA_WITH_AES_128_CBC_SHA

- F. Increasing the key length to 256 for TLS_RSA_WITH_AES_128_CBC_SHA

Answer:

B,C

Explanation:

On-path decryption attacks, such as BEAST (Browser Exploit Against SSL/TLS) and related vulnerabilities, often exploit weaknesses in the implementation of CBC (Cipher Block Chaining) mode. To mitigate these attacks, the following actions are recommended:

B. Removing support for CBC-based key exchange and signing algorithms: CBC mode is vulnerable to attacks such as BEAST. Removing support for CBC-based cipher suites eliminates one of the primary vectors for these attacks. Instead, use modern cipher modes such as GCM (Galois/Counter Mode), which offer better security properties.

C. Adding TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA256: This cipher suite uses Elliptic Curve Diffie-Hellman Ephemeral (ECDHE) for key exchange, which provides perfect forward secrecy. It also uses AES in GCM mode, which is not susceptible to the same attacks as CBC. GCM is an authenticated mode, so it protects integrity as well as confidentiality; the SHA-256 in the suite name is the hash used by the handshake's pseudorandom function.
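The selection logic above can be sketched as a filter over cipher suite names. This is a minimal illustration rather than a server configuration: the function name `allowed_suites` and the sample list are hypothetical, and matching on substrings assumes suite names written in the same style as the answer options.

```python
def allowed_suites(suites):
    """Keep only suites that use ECDHE key exchange with AES-GCM.

    Assumes names in the style of TLS_RSA_WITH_AES_128_CBC_SHA.
    """
    kept = []
    for name in suites:
        if "_CBC_" in name:
            continue  # CBC-mode record protection: drop the suite
        if name.startswith("TLS_ECDHE_") and "_GCM_" in name:
            kept.append(name)  # ephemeral key exchange plus GCM: keep
    return kept


# Hypothetical scan result, for illustration only.
offered = [
    "TLS_RSA_WITH_AES_128_CBC_SHA",
    "TLS_ECDHE_ECDSA_WITH_AES_128_CBC_SHA256",
    "TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA256",
]
print(allowed_suites(offered))
# ['TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA256']
```

Only the suite from option C survives the filter, which matches the reasoning for answers B and C.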

Reference:

CompTIA Security+ Study Guide

NIST SP 800-52 Rev. 2, "Guidelines for the Selection, Configuration, and Use of Transport Layer Security (TLS) Implementations"

OWASP (Open Web Application Security Project) guidelines on cryptography and secure communication


Question 2

[Security Operations]

The identity and access management team is sending logs to the SIEM for continuous monitoring. The deployed log collector is forwarding logs to the SIEM. However, only false positive alerts are being generated. Which of the following is the most likely reason for the inaccurate alerts?

- A. The compute resources are insufficient to support the SIEM

- B. The SIEM indexes are too large

- C. The data is not being properly parsed

- D. The retention policy is not properly configured

Answer:

C

Explanation:

Proper parsing of data is crucial for the SIEM to accurately interpret and analyze the logs being forwarded by the log collector. If the data is not parsed correctly, the SIEM may misinterpret the logs, leading to false positives and inaccurate alerts. Ensuring that the log data is correctly parsed allows the SIEM to correlate and analyze the logs effectively, which is essential for accurate alerting and monitoring.
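To illustrate how unparsed data can produce a false positive, here is a small sketch. The log format, the field names, and both rule functions are assumptions made up for this example; they are not taken from any particular SIEM product.

```python
import re

# Hypothetical log format, for illustration:
#   <timestamp> user=<name> action=<verb> result=<status>
LINE_RE = re.compile(
    r"^(?P<ts>\S+) user=(?P<user>\S+) action=(?P<action>\S+) result=(?P<result>\S+)$"
)


def parse_line(line):
    """Return a dict of named fields, or None if the line does not match."""
    match = LINE_RE.match(line.strip())
    return match.groupdict() if match else None


def is_failed_login(event):
    """Alert rule that relies on correctly parsed fields."""
    return event["action"] == "login" and event["result"] == "failure"


def naive_rule(line):
    """Rule applied to raw text: fires on any mention of 'failure'."""
    return "failure" in line


ok_line = "2024-01-01T10:00:00Z user=failure_test action=login result=success"
event = parse_line(ok_line)
print(is_failed_login(event))  # False: the parsed result field is "success"
print(naive_rule(ok_line))     # True: the raw match hits the user name
```

The successful login is flagged only by the rule that works on unparsed text, which is the kind of inaccurate alert the explanation describes.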


Question 3

[Security Operations]

An incident response team is analyzing malware and observes the following:

• Does not execute in a sandbox

• No network IoCs

• No publicly known hash match

• No process injection method detected

Which of the following should the team do next to proceed with further analysis?

- A. Use an online virus analysis tool to analyze the sample

- B. Check for an anti-virtualization code in the sample

- C. Utilize a newly deployed machine to run the sample

- D. Search other internal sources for a new sample

Answer:

B

Explanation:

Malware that does not execute in a sandbox environment often contains anti-analysis techniques, such as anti-virtualization code. This code detects when the malware is running in a virtualized environment and alters its behavior to avoid detection. Checking for anti-virtualization code is a logical next step because:

• It helps determine if the malware is designed to evade analysis tools.

• Identifying such code can provide insights into the malware's behavior and intent.

• This step can also inform further analysis methods, such as running the malware on physical hardware.
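One simple first pass at checking for anti-virtualization code is a static scan for strings that name common hypervisors. The sketch below is only that: the marker list and the `find_vm_markers` helper are hypothetical, and a clean result does not rule out other techniques (timing checks, CPU instruction checks, or packed code) that leave no readable strings.

```python
# Illustrative marker list; real samples may check for other artifacts.
VM_MARKERS = [b"vmware", b"virtualbox", b"vbox", b"qemu"]


def find_vm_markers(data: bytes):
    """Return the sorted hypervisor-related markers found in the sample bytes."""
    lowered = data.lower()
    return sorted({m.decode() for m in VM_MARKERS if m in lowered})


# Made-up bytes standing in for a sample that looks up a VMware registry key.
sample = b"\x00\x01MZ...SOFTWARE\\VMware, Inc.\\VMware Tools...\x00"
print(find_vm_markers(sample))
# ['vmware']
```

A hit like this would support moving the analysis to physical hardware, as the last point above suggests.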

Reference:

CompTIA Security+ Study Guide

SANS Institute, "Malware Analysis Techniques"

"Practical Malware Analysis" by Michael Sikorski and Andrew Honig


Question 4

[Governance, Risk, and Compliance (GRC)]

Which of the following best explains the importance of determining organizational risk appetite when operating with a constrained budget?

- A. Risk appetite directly impacts acceptance of high-impact low-likelihood events.

- B. Organizational risk appetite varies from organization to organization

- C. Budgetary pressure drives risk mitigation planning in all companies

- D. Risk appetite directly influences which breaches are disclosed publicly

Answer:

A

Explanation:

Risk appetite is the amount of risk an organization is willing to accept to achieve its objectives. When operating with a constrained budget, understanding the organization's risk appetite is crucial because:

• It helps prioritize security investments based on the level of risk the organization is willing to tolerate.

• High-impact, low-likelihood events may be deemed acceptable if they fall within the organization's risk appetite, allowing for budget allocation to other critical areas.

Properly understanding and defining risk appetite ensures that limited resources are used effectively to manage risks that align with the organization's strategic goals.

Reference:

CompTIA Security+ Study Guide

NIST Risk Management Framework (RMF) guidelines

ISO 31000, "Risk Management – Guidelines"


Question 5

[Security Engineering and Cryptography]

Developers have been creating and managing cryptographic material on their personal laptops for use in a production environment. A security engineer needs to initiate a more secure process. Which of the following is the best strategy for the engineer to use?

- A. Disabling the BIOS and moving to UEFI

- B. Managing secrets on the vTPM hardware

- C. Employing shielding to prevent EMI

- D. Managing key material on a HSM

Answer:

D

Explanation:

The best strategy for securely managing cryptographic material is to use a Hardware Security Module (HSM). Here’s why:

Security and Integrity: HSMs are specialized hardware devices designed to protect and manage digital keys. They provide high levels of physical and logical security, ensuring that cryptographic material is well protected against tampering and unauthorized access.

Centralized Key Management: Using HSMs allows for centralized management of cryptographic keys, reducing the risks associated with decentralized and potentially insecure key storage practices, such as on personal laptops.

Compliance and Best Practices: HSMs comply with various industry standards and regulations (such as FIPS 140-2) for secure key management. This ensures that the organization adheres to best practices and meets compliance requirements.

Reference:

CompTIA Security+ SY0-601 Study Guide by Mike Chapple and David Seidl

NIST Special Publication 800-57: Recommendation for Key Management

ISO/IEC 19790:2012: Information Technology - Security Techniques - Security Requirements for Cryptographic Modules


Question 6

[Security Architecture]

Users are writing passwords on paper because of the number of passwords needed in an environment. Which of the following solutions is the best way to manage this situation and decrease risks?

- A. Increasing password complexity to require at least 16 characters

- B. Implementing an SSO solution and integrating with applications

- C. Requiring users to use an open-source password manager

- D. Implementing an MFA solution to avoid reliance only on passwords

Answer:

B

Explanation:

Implementing a Single Sign-On (SSO) solution and integrating it with applications is the best way to manage the situation and decrease risks. Here’s why:

Reduced Password Fatigue: SSO allows users to log in once and gain access to multiple applications and systems without needing to remember and manage multiple passwords. This reduces the likelihood of users writing down passwords.

Improved Security: By reducing the number of passwords users need to manage, SSO decreases the attack surface and potential for password-related security breaches. It also allows for the implementation of stronger authentication methods.

User Convenience: SSO improves the user experience by simplifying the login process, which can lead to higher productivity and satisfaction.

Reference:

CompTIA Security+ SY0-601 Study Guide by Mike Chapple and David Seidl

NIST Special Publication 800-63B: Digital Identity Guidelines - Authentication and Lifecycle Management

OWASP Authentication Cheat Sheet


Question 7

[Governance, Risk, and Compliance (GRC)]

The material findings from a recent compliance audit indicate a company has an issue with excessive permissions. The findings show that employees changing roles or departments results in privilege creep. Which of the following solutions are the best ways to mitigate this issue? (Select two).

- A. Setting different access controls defined by business area

- B. Implementing a role-based access policy

- C. Designing a least-needed privilege policy

- D. Establishing a mandatory vacation policy

- E. Performing periodic access reviews

- F. Requiring periodic job rotation

Answer:

B,E

Explanation:

To mitigate the issue of excessive permissions and privilege creep, the best solutions are:

Implementing a Role-Based Access Policy:

Role-Based Access Control (RBAC): This policy ensures that access permissions are granted based on the user's role within the organization, aligning with the principle of least privilege. Users are granted only the access necessary for their role, reducing the risk of excessive permissions.

Reference:

CompTIA Security+ SY0-601 Study Guide by Mike Chapple and David Seidl

NIST Special Publication 800-53: Security and Privacy Controls for Information Systems and Organizations

Performing Periodic Access Reviews:

Regular Audits: Periodic access reviews help identify and rectify instances of privilege creep by ensuring that users' access permissions are appropriate for their current roles. These reviews can highlight unnecessary or outdated permissions, allowing for timely adjustments.

Reference:

CompTIA Security+ SY0-601 Study Guide by Mike Chapple and David Seidl

ISO/IEC 27001:2013 - Information Security Management
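A periodic access review combined with a role-based policy can be sketched as a set difference between what a user holds and what the current role allows. The role names, permission strings, and the `excess_permissions` helper below are all hypothetical, chosen only to illustrate the idea.

```python
# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "accountant": {"ledger:read", "ledger:write"},
    "hr_analyst": {"hr_records:read"},
}


def excess_permissions(current_role, granted):
    """Return granted permissions that the current role does not allow."""
    allowed = ROLE_PERMISSIONS.get(current_role, set())
    return sorted(set(granted) - allowed)


# A user who moved from accountant to hr_analyst but kept the old access.
granted = {"ledger:read", "ledger:write", "hr_records:read"}
print(excess_permissions("hr_analyst", granted))
# ['ledger:read', 'ledger:write']
```

Anything the review returns is a candidate for removal, which is how privilege creep from a role change gets caught.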


Question 8

[Security Architecture]

A security architect is establishing requirements to design resilience in an enterprise system that will be extended to other physical locations. The system must:

• Be survivable to one environmental catastrophe

• Be recoverable within 24 hours of critical loss of availability

• Be resilient to active exploitation of one site-to-site VPN solution

- A. Load-balance connection attempts and data ingress at internet gateways

- B. Allocate fully redundant and geographically distributed standby sites.

- C. Employ layering of routers from diverse vendors

- D. Lease space to establish cold sites throughout other countries

- E. Use orchestration to procure, provision, and transfer application workloads to cloud services

- F. Implement full weekly backups to be stored off-site for each of the company's sites

Answer:

B

Explanation:

To design resilience in an enterprise system that can survive environmental catastrophes, recover within 24 hours, and be resilient to active exploitation, the best strategy is to allocate fully redundant and geographically distributed standby sites. Here’s why:

Geographical Redundancy: Having geographically distributed standby sites ensures that if one site is affected by an environmental catastrophe, the other sites can take over, providing continuity of operations.

Full Redundancy: Fully redundant sites mean that all critical systems and data are replicated, enabling quick recovery in the event of a critical loss of availability.

Resilience to Exploitation: Distributing resources across multiple sites reduces the risk of a single point of failure and increases resilience against targeted attacks.

Reference:

CompTIA Security+ SY0-601 Study Guide by Mike Chapple and David Seidl

NIST Special Publication 800-34: Contingency Planning Guide for Federal Information Systems

ISO/IEC 27031:2011 - Guidelines for Information and Communication Technology Readiness for Business Continuity


Question 9

[Emerging Technologies and Threats]

Users must accept the terms presented in a captive portal when connecting to a guest network. Recently, users have reported that they are unable to access the Internet after joining the network. A network engineer observes the following:

• Users should be redirected to the captive portal.

• The captive portal runs TLS 1.2.

• Newer browser versions encounter security errors that cannot be bypassed.

• Certain websites cause unexpected redirects.

Which of the following most likely explains this behavior?

- A. The TLS ciphers supported by the captive portal are deprecated

- B. Employment of the HSTS setting is proliferating rapidly.

- C. Allowed traffic rules are causing the NIPS to drop legitimate traffic

- D. An attacker is redirecting supplicants to an evil twin WLAN.

Answer:

A

Explanation:

The most likely explanation for the issues encountered with the captive portal is that the TLS ciphers supported by the captive portal are deprecated. Here’s why:

TLS Cipher Suites: Modern browsers are continuously updated to support the latest security standards and often drop support for deprecated and insecure cipher suites. If the captive portal uses outdated TLS ciphers, newer browsers may refuse to connect, causing security errors.

HSTS and Browser Security: For sites that enable HTTP Strict Transport Security (HSTS), browsers require HTTPS and do not let users click through TLS errors. A handshake failure caused by deprecated TLS ciphers therefore produces an error that cannot be bypassed.

Reference:

CompTIA Security+ SY0-601 Study Guide by Mike Chapple and David Seidl

NIST Special Publication 800-52: Guidelines for the Selection, Configuration, and Use of Transport Layer Security (TLS) Implementations

OWASP Transport Layer Protection Cheat Sheet

By updating the TLS ciphers to modern, supported ones, the security engineer can ensure compatibility with newer browser versions and resolve the connectivity issues reported by users.
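Why a newer browser fails where an older one connects can be shown with a simplified model of cipher suite negotiation. This is a sketch under stated assumptions: the `negotiate` function, the server-preference ordering, and all three suite lists are hypothetical, and real TLS negotiation involves more than suite names.

```python
def negotiate(client_suites, server_suites):
    """Return the first server-preferred suite the client also supports.

    Returns None when there is no overlap, which models a failed handshake.
    """
    client = set(client_suites)
    for suite in server_suites:
        if suite in client:
            return suite
    return None


# Hypothetical suite lists, for illustration only.
portal = ["TLS_RSA_WITH_3DES_EDE_CBC_SHA", "TLS_RSA_WITH_RC4_128_SHA"]
new_browser = [
    "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",
    "TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384",
]
old_browser = new_browser + ["TLS_RSA_WITH_3DES_EDE_CBC_SHA"]

print(negotiate(new_browser, portal))  # None
print(negotiate(old_browser, portal))  # TLS_RSA_WITH_3DES_EDE_CBC_SHA
```

Adding a modern suite to the portal's list restores an overlap with the newer browser, which is the fix described above.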


Question 10

[Security Architecture]

A security engineer is building a solution to disable weak CBC configurations for remote access connections to Linux systems. Which of the following should the security engineer modify?

- A. The /etc/openssl.conf file, updating the virtual site parameter

- B. The /etc/nsswitch.conf file, updating the name server

- C. The /etc/hosts file, updating the IP parameter

- D. The /etc/ssh/sshd_config file, updating the ciphers

Answer:

D

Explanation:

The sshd_config file is the main configuration file for the OpenSSH server. To disable weak CBC (Cipher Block Chaining) ciphers for SSH connections, the security engineer should modify the sshd_config file to update the list of allowed ciphers. This file typically contains settings for the SSH daemon, including which encryption algorithms are allowed.

By editing the /etc/ssh/sshd_config file and updating the Ciphers directive, weak ciphers can be removed, and only strong ciphers can be allowed. This change ensures that the SSH server does not use insecure encryption methods.
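As a rough sketch of the change described above, the helper below rewrites a `Ciphers` line so that CBC-mode entries are dropped. The `strip_cbc` function and the sample line are assumptions for illustration; the cipher names follow OpenSSH's naming, where CBC ciphers end in `-cbc`.

```python
def strip_cbc(ciphers_line):
    """Rewrite a 'Ciphers' directive without its CBC-mode entries."""
    keyword, _, value = ciphers_line.strip().partition(" ")
    if keyword.lower() != "ciphers":
        raise ValueError("not a Ciphers directive")
    # Keep every non-empty entry that is not a CBC-mode cipher.
    kept = [c for c in value.strip().split(",") if c and not c.endswith("-cbc")]
    return "Ciphers " + ",".join(kept)


# Example directive as it might appear in /etc/ssh/sshd_config.
line = "Ciphers aes256-gcm@openssh.com,aes128-ctr,aes128-cbc,3des-cbc"
print(strip_cbc(line))
# Ciphers aes256-gcm@openssh.com,aes128-ctr
```

After editing the real file, running `sshd -t` checks the configuration for errors before the daemon is reloaded.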

Reference:

CompTIA Security+ Study Guide

OpenSSH manual pages (man sshd_config)

CIS Benchmarks for Linux

