Amazon AWS Certified Database - Specialty practice test

Last exam update: Jul 20, 2024

Question 1

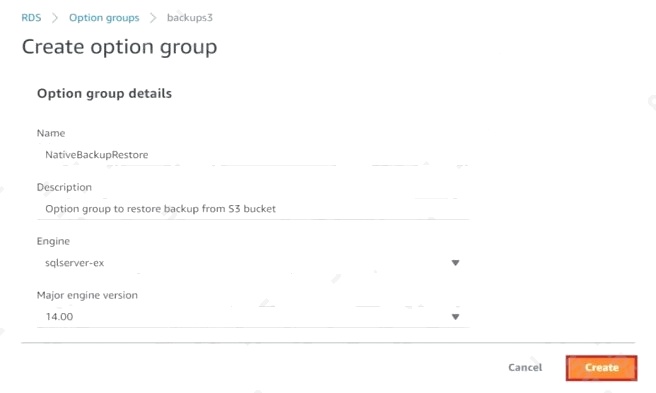

A company has an on-premises production Microsoft SQL Server with 250 GB of data in one database. A database specialist needs to migrate this on-premises SQL Server to Amazon RDS for SQL Server. The nightly native SQL Server backup file is approximately 120 GB in size. The application can be down for an extended period of time to complete the migration. Connectivity between the on-premises environment and AWS can be initiated from on-premises only.

How can the database be migrated from on-premises to Amazon RDS with the LEAST amount of effort?

- A. Back up the SQL Server database using a native SQL Server backup. Upload the backup files to Amazon S3. Download the backup files on an Amazon EC2 instance and restore them from the EC2 instance into the new production RDS instance.

- B. Back up the SQL Server database using a native SQL Server backup. Upload the backup files to Amazon S3. Restore the backup files from the S3 bucket into the new production RDS instance.

- C. Provision and configure AWS DMS. Set up replication from the on-premises SQL Server environment to the new production RDS instance.

- D. Back up the SQL Server database using AWS Backup. Once the backup is complete, restore the completed backup to an Amazon EC2 instance and move it to the new production RDS instance.

Answer:

B

Explanation:

Reference: https://www.sqlshack.com/aws-rds-sql-server-migration-using-native-backups/
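The restore step in option B can be sketched as the T-SQL call that RDS for SQL Server exposes for native restores, `msdb.dbo.rds_restore_database`. The helper below only builds the statement text; the database, bucket, and key names are hypothetical, and the sketch assumes the instance already has the `SQLSERVER_BACKUP_RESTORE` option enabled with an IAM role that can read the bucket.

```python
def build_rds_restore_call(db_name: str, bucket: str, key: str) -> str:
    """Return the T-SQL that asks RDS for SQL Server to restore a .bak file from S3."""

    def quote(value: str) -> str:
        # Escape embedded single quotes for a T-SQL string literal.
        return "'" + value.replace("'", "''") + "'"

    # S3 object ARN of the uploaded native backup file.
    s3_arn = f"arn:aws:s3:::{bucket}/{key}"
    return (
        "exec msdb.dbo.rds_restore_database "
        f"@restore_db_name={quote(db_name)}, "
        f"@s3_arn_to_restore_from={quote(s3_arn)};"
    )


if __name__ == "__main__":
    # Hypothetical names, for illustration only.
    print(build_rds_restore_call("crm", "example-migration-bucket", "backups/crm.bak"))
```

The generated statement would be run against the RDS instance from any SQL client once the upload to Amazon S3 has finished.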

Question 2

A Database Specialist is setting up a new Amazon Aurora DB cluster with one primary instance and three Aurora Replicas for a highly intensive, business-critical application. The Aurora DB cluster has one medium-sized primary instance, one large-sized replica, and two medium-sized replicas. The Database Specialist did not assign a promotion tier to the replicas.

In the event of a primary failure, what will occur?

- A. Aurora will promote an Aurora Replica that is of the same size as the primary instance

- B. Aurora will promote an arbitrary Aurora Replica

- C. Aurora will promote the largest-sized Aurora Replica

- D. Aurora will not promote an Aurora Replica

Answer:

C

Explanation:

Reference: https://docs.aws.amazon.com/AmazonRDS/latest/AuroraUserGuide/aurora-ug.pdf
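The Aurora User Guide describes failover target selection as: lowest promotion tier first, then the largest instance within that tier, and an arbitrary choice among replicas that tie on both. The sketch below models only that ordering. The `Replica` class and the numeric `size_rank` are illustrative assumptions, not part of any AWS API; replicas with no tier assigned are given the documented default of tier 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    name: str
    size_rank: int  # hypothetical ordering of instance sizes: larger means bigger
    tier: int = 1   # promotion tier 0-15; tier 1 is the default when none is set


def failover_candidates(replicas):
    """Return the replicas Aurora could promote: best tier, then largest size."""
    best_tier = min(r.tier for r in replicas)
    in_tier = [r for r in replicas if r.tier == best_tier]
    biggest = max(r.size_rank for r in in_tier)
    # More than one survivor means the final pick among them is arbitrary.
    return [r for r in in_tier if r.size_rank == biggest]


if __name__ == "__main__":
    fleet = [Replica("large-1", 3), Replica("medium-1", 2), Replica("medium-2", 2)]
    print([r.name for r in failover_candidates(fleet)])
```

With the fleet from the question (one large and two medium replicas, no tiers set), the only candidate is the large replica.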

Question 3

A company is going through a security audit. The audit team has identified cleartext master user passwords in the AWS CloudFormation templates for Amazon RDS for MySQL DB instances. The audit team has flagged this as a security risk to the database team.

What should a database specialist do to mitigate this risk?

- A. Change all the databases to use AWS IAM for authentication and remove all the cleartext passwords in CloudFormation templates.

- B. Use an AWS Secrets Manager resource to generate a random password and reference the secret in the CloudFormation template.

- C. Remove the passwords from the CloudFormation templates so Amazon RDS prompts for the password when the database is being created.

- D. Remove the passwords from the CloudFormation template and store them in a separate file. Replace the passwords by running CloudFormation using a sed command.

Answer:

B
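A minimal sketch of the Secrets Manager approach: the template declares an `AWS::SecretsManager::Secret` that generates the password, and the DB instance reads it through a `{{resolve:secretsmanager:...}}` dynamic reference, so no cleartext password appears in the template. The template is built as a Python dictionary only so it can be printed as JSON; the logical IDs (`DbSecret`, `Database`), the user name, and the instance settings are assumptions for illustration.

```python
import json


def build_template() -> dict:
    """Build a CloudFormation template with a generated master password."""
    secret_ref = "{{resolve:secretsmanager:${DbSecret}:SecretString:%s}}"
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "DbSecret": {
                "Type": "AWS::SecretsManager::Secret",
                "Properties": {
                    "GenerateSecretString": {
                        # Fixed part of the secret; the password key is generated.
                        "SecretStringTemplate": json.dumps({"username": "dbadmin"}),
                        "GenerateStringKey": "password",
                        "PasswordLength": 32,
                        # Characters RDS does not accept in a master password.
                        "ExcludeCharacters": "\"@/\\",
                    }
                },
            },
            "Database": {
                "Type": "AWS::RDS::DBInstance",
                "Properties": {
                    "Engine": "mysql",
                    "DBInstanceClass": "db.t3.medium",  # hypothetical sizing
                    "AllocatedStorage": "20",
                    "MasterUsername": {"Fn::Sub": secret_ref % "username"},
                    "MasterUserPassword": {"Fn::Sub": secret_ref % "password"},
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_template(), indent=2))
```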

Question 4

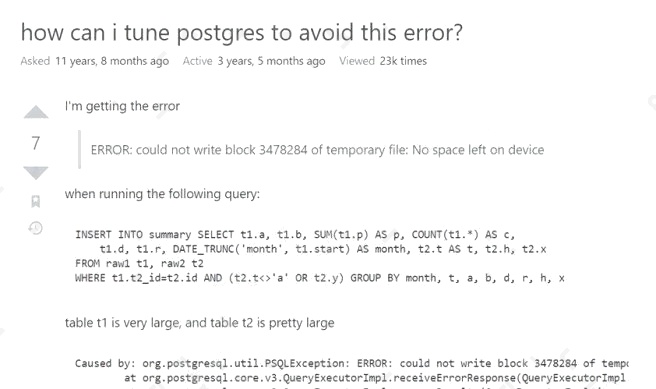

A Database Specialist is performing a proof of concept with Amazon Aurora using a small instance to confirm a simple database behavior. When loading a large dataset and creating the index, the Database Specialist encounters the following error message from Aurora:

ERROR: could not write block 7507718 of temporary file: No space left on device

What is the cause of this error and what should the Database Specialist do to resolve this issue?

- A. The scaling of Aurora storage cannot catch up with the data loading. The Database Specialist needs to modify the workload to load the data slowly.

- B. The scaling of Aurora storage cannot catch up with the data loading. The Database Specialist needs to enable Aurora storage scaling.

- C. The local storage used to store temporary tables is full. The Database Specialist needs to scale up the instance.

- D. The local storage used to store temporary tables is full. The Database Specialist needs to enable local storage scaling.

Answer:

C

Explanation:

Reference: https://serverfault.com/questions/109828/how-can-i-tune-postgres-to-avoid-this-error

Question 5

A database specialist wants to ensure that an Amazon Aurora DB cluster is always automatically upgraded to the most recent minor version available. Noticing that there is a new minor version available, the database specialist has issued an AWS CLI command to enable automatic minor version updates. The command runs successfully, but checking the Aurora DB cluster indicates that no update to the Aurora version has been made.

What might account for this? (Choose two.)

- A. The new minor version has not yet been designated as preferred and requires a manual upgrade.

- B. Configuring automatic upgrades using the AWS CLI is not supported. This must be enabled expressly using the AWS Management Console.

- C. Applying minor version upgrades requires sufficient free space.

- D. The AWS CLI command did not include an apply-immediately parameter.

- E. Aurora has detected a breaking change in the new minor version and has automatically rejected the upgrade.

Answer:

C D

Question 6

A company is going to use an Amazon Aurora PostgreSQL DB cluster for an application backend. The DB cluster contains some tables with sensitive data. A Database Specialist needs to control the access privileges at the table level.

How can the Database Specialist meet these requirements?

- A. Use AWS IAM database authentication and restrict access to the tables using an IAM policy.

- B. Configure the rules in a NACL to restrict outbound traffic from the Aurora DB cluster.

- C. Execute GRANT and REVOKE commands that restrict access to the tables containing sensitive data.

- D. Define access privileges to the tables containing sensitive data in the pg_hba.conf file.

Answer:

C

Explanation:

Reference: https://aws.amazon.com/blogs/database/managing-postgresql-users-and-roles/
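Table-level control with GRANT and REVOKE can be sketched as a small helper that emits the statements for one sensitive table. This is an illustrative sketch, not a complete privilege model: the schema, table, and role names are hypothetical, and the helper only generates SQL text for a DBA to review and run.

```python
ALLOWED = {"SELECT", "INSERT", "UPDATE", "DELETE", "TRUNCATE", "REFERENCES", "TRIGGER"}


def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def table_privilege_statements(schema, table, role, privileges):
    """Return SQL that removes default access and grants only the listed privileges."""
    wanted = [p.upper() for p in privileges]
    unknown = sorted(set(wanted) - ALLOWED)
    if unknown:
        raise ValueError(f"unsupported table privileges: {unknown}")
    target = f"{quote_ident(schema)}.{quote_ident(table)}"
    return [
        # Drop whatever PUBLIC may have been granted on the table.
        f"REVOKE ALL ON TABLE {target} FROM PUBLIC;",
        # Grant only what the named role needs.
        f"GRANT {', '.join(wanted)} ON TABLE {target} TO {quote_ident(role)};",
    ]


if __name__ == "__main__":
    for stmt in table_privilege_statements("sales", "payments", "auditor", ["select"]):
        print(stmt)
```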

Question 7

A company uses an Amazon RDS for PostgreSQL DB instance for its customer relationship management (CRM) system. New compliance requirements specify that the database must be encrypted at rest.

Which action will meet these requirements?

- A. Create an encrypted copy of a manual snapshot of the DB instance. Restore a new DB instance from the encrypted snapshot.

- B. Modify the DB instance and enable encryption.

- C. Restore a DB instance from the most recent automated snapshot and enable encryption.

- D. Create an encrypted read replica of the DB instance. Promote the read replica to a standalone instance.

Answer:

A

Explanation:

Reference: https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/Overview.Encryption.html
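Per the RDS encryption documentation, an existing unencrypted DB instance cannot be switched to encrypted in place; the documented route is to copy a snapshot with a KMS key and restore from the encrypted copy. The sketch below shows that sequence using boto3 RDS client method names (`copy_db_snapshot`, the `db_snapshot_available` waiter, `restore_db_instance_from_db_snapshot`). The client is passed in as a parameter, and all identifiers are hypothetical.

```python
def encrypt_via_snapshot_copy(rds, source_snapshot_id, kms_key_id, new_instance_id):
    """Copy a snapshot with encryption enabled, then restore a new instance from it.

    `rds` is expected to behave like boto3.client("rds").
    """
    encrypted_snapshot_id = f"{source_snapshot_id}-encrypted"

    # Supplying KmsKeyId on the copy is what produces an encrypted snapshot.
    rds.copy_db_snapshot(
        SourceDBSnapshotIdentifier=source_snapshot_id,
        TargetDBSnapshotIdentifier=encrypted_snapshot_id,
        KmsKeyId=kms_key_id,
    )

    # Block until the encrypted copy is ready to be restored.
    rds.get_waiter("db_snapshot_available").wait(
        DBSnapshotIdentifier=encrypted_snapshot_id
    )

    # The restored instance inherits encryption from the snapshot.
    rds.restore_db_instance_from_db_snapshot(
        DBInstanceIdentifier=new_instance_id,
        DBSnapshotIdentifier=encrypted_snapshot_id,
    )
    return encrypted_snapshot_id
```

A real run would look something like `encrypt_via_snapshot_copy(boto3.client("rds"), "crm-manual", "alias/example-key", "crm-encrypted")`, followed by repointing the application at the new instance endpoint.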

Question 8

An ecommerce company uses Amazon DynamoDB as the backend for its payments system. A new regulation requires the company to log all data access requests for financial audits. For this purpose, the company plans to use AWS logging and save logs to Amazon S3.

How can a database specialist activate logging on the database?

- A. Use AWS CloudTrail to monitor DynamoDB control-plane operations. Create a DynamoDB stream to monitor data-plane operations. Pass the stream to Amazon Kinesis Data Streams. Use that stream as a source for Amazon Kinesis Data Firehose to store the data in an Amazon S3 bucket.

- B. Use AWS CloudTrail to monitor DynamoDB data-plane operations. Create a DynamoDB stream to monitor control-plane operations. Pass the stream to Amazon Kinesis Data Streams. Use that stream as a source for Amazon Kinesis Data Firehose to store the data in an Amazon S3 bucket.

- C. Create two trails in AWS CloudTrail. Use Trail1 to monitor DynamoDB control-plane operations. Use Trail2 to monitor DynamoDB data-plane operations.

- D. Use AWS CloudTrail to monitor DynamoDB data-plane and control-plane operations.

Answer:

D

Explanation:

Reference: https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/best-practices-security-detective.html
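Control-plane (management) events are recorded by a trail by default, while DynamoDB data-plane events have to be turned on per trail through an event selector. The helper below builds one such selector in the shape accepted by the CloudTrail `PutEventSelectors` API; the table ARN in the usage example is a hypothetical placeholder.

```python
def dynamodb_event_selector(table_arns):
    """Build a CloudTrail event selector covering both planes for DynamoDB tables."""
    return {
        "ReadWriteType": "All",            # log reads and writes
        "IncludeManagementEvents": True,   # control-plane operations
        "DataResources": [
            {
                # Data-plane operations on the listed tables.
                "Type": "AWS::DynamoDB::Table",
                "Values": list(table_arns),
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical account ID and table name.
    arn = "arn:aws:dynamodb:us-east-1:111122223333:table/Payments"
    print(dynamodb_event_selector([arn]))
```

The resulting dictionary would be passed as one element of `EventSelectors` in a `put_event_selectors` call for a trail that already delivers to the target S3 bucket.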

Question 9

The Development team recently executed a database script containing several data definition language (DDL) and data manipulation language (DML) statements on an Amazon Aurora MySQL DB cluster. The release accidentally deleted thousands of rows from an important table and broke some application functionality. This was discovered 4 hours after the release. Upon investigation, a Database Specialist tracked the issue to a DELETE command in the script with an incorrect WHERE clause filtering the wrong set of rows.

The Aurora DB cluster has Backtrack enabled with an 8-hour backtrack window. The Database Administrator also took a manual snapshot of the DB cluster before the release started. The database needs to be returned to the correct state as quickly as possible to resume full application functionality. Data loss must be minimal. How can the Database Specialist accomplish this?

- A. Quickly rewind the DB cluster to a point in time before the release using Backtrack.

- B. Perform a point-in-time recovery (PITR) of the DB cluster to a time before the release and copy the deleted rows from the restored database to the original database.

- C. Restore the DB cluster using the manual backup snapshot created before the release and change the application configuration settings to point to the new DB cluster.

- D. Create a clone of the DB cluster with Backtrack enabled. Rewind the cloned cluster to a point in time before the release. Copy deleted rows from the clone to the original database.

Answer:

D

Question 10

A company has an on-premises system that tracks various database operations that occur over the lifetime of a database, including database shutdown, deletion, creation, and backup.

The company recently moved two databases to Amazon RDS and is looking at a solution that would satisfy these requirements. The data could be used by other systems within the company.

Which solution will meet these requirements with minimal effort?

- A. Create an Amazon CloudWatch Events rule with the operations that need to be tracked on Amazon RDS. Create an AWS Lambda function to act on these rules and write the output to the tracking systems.

- B. Create an AWS Lambda function to trigger on AWS CloudTrail API calls. Filter on specific RDS API calls and write the output to the tracking systems.

- C. Create RDS event subscriptions. Have the tracking systems subscribe to specific RDS event system notifications.

- D. Write RDS logs to Amazon Kinesis Data Firehose. Create an AWS Lambda function to act on these rules and write the output to the tracking systems.

Answer:

C
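An RDS event subscription publishes selected event categories to an Amazon SNS topic, which the tracking systems can then subscribe to. The sketch below uses the boto3 RDS client method `create_event_subscription`. The mapping of the question's operations to the `availability`, `backup`, `creation`, and `deletion` categories is an assumption for illustration (shutdown events fall under `availability`), and the subscription name and topic ARN are hypothetical.

```python
LIFECYCLE_CATEGORIES = ["availability", "backup", "creation", "deletion"]


def subscribe_lifecycle_events(rds, subscription_name, sns_topic_arn):
    """Create an RDS event subscription for DB instance lifecycle events.

    `rds` is expected to behave like boto3.client("rds").
    """
    return rds.create_event_subscription(
        SubscriptionName=subscription_name,
        SnsTopicArn=sns_topic_arn,
        SourceType="db-instance",
        EventCategories=LIFECYCLE_CATEGORIES,
        Enabled=True,
    )
```

Omitting `SourceIds`, as here, applies the subscription to every DB instance in the account and Region rather than to named instances.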

Question 11

An ecommerce company recently migrated one of its SQL Server databases to an Amazon RDS for SQL Server Enterprise Edition DB instance. The company expects a spike in read traffic due to an upcoming sale. A database specialist must create a read replica of the DB instance to serve the anticipated read traffic.

Which actions should the database specialist take before creating the read replica? (Choose two.)

- A. Identify a potential downtime window and stop the application calls to the source DB instance.

- B. Ensure that automatic backups are enabled for the source DB instance.

- C. Ensure that the source DB instance is a Multi-AZ deployment with Always On Availability Groups.

- D. Ensure that the source DB instance is a Multi-AZ deployment with SQL Server Database Mirroring (DBM).

- E. Modify the read replica parameter group setting and set the value to 1.

Answer:

B C

Question 12

The Security team for a finance company was notified of an internal security breach that happened 3 weeks ago. A Database Specialist must start producing audit logs out of the production Amazon Aurora PostgreSQL cluster for the Security team to use for monitoring and alerting. The Security team is required to perform real-time alerting and monitoring outside the Aurora DB cluster and wants to have the cluster push encrypted files to the chosen solution. Which approach will meet these requirements?

- A. Use pg_audit to generate audit logs and send the logs to the Security team.

- B. Use AWS CloudTrail to audit the DB cluster and the Security team will get data from Amazon S3.

- C. Set up database activity streams and connect the data stream from Amazon Kinesis to consumer applications.

- D. Turn on verbose logging and set up a schedule for the logs to be dumped out for the Security team.

Answer:

C

Explanation:

Reference: https://docs.aws.amazon.com/AmazonRDS/latest/AuroraUserGuide/aurora-ug.pdf (525)

Question 13

A company uses Amazon Aurora MySQL as the primary database engine for many of its applications. A database specialist must create a dashboard to provide the company with information about user connections to databases. According to compliance requirements, the company must retain all connection logs for at least 7 years.

Which solution will meet these requirements MOST cost-effectively?

- A. Enable advanced auditing on the Aurora cluster to log CONNECT events. Export audit logs from Amazon CloudWatch to Amazon S3 by using an AWS Lambda function that is invoked by an Amazon EventBridge (Amazon CloudWatch Events) scheduled event. Build a dashboard by using Amazon QuickSight.

- B. Capture connection attempts to the Aurora cluster with AWS CloudTrail by using the DescribeEvents API operation. Create a CloudTrail trail to export connection logs to Amazon S3. Build a dashboard by using Amazon QuickSight.

- C. Start a database activity stream for the Aurora cluster. Push the activity records to an Amazon Kinesis data stream. Build a dynamic dashboard by using AWS Lambda.

- D. Publish the DatabaseConnections metric for the Aurora DB instances to Amazon CloudWatch. Build a dashboard by using CloudWatch dashboards.

Answer:

A

Explanation:

Reference: https://docs.aws.amazon.com/AmazonRDS/latest/AuroraUserGuide/DBActivityStreams.html

Question 14

A database specialist has a fleet of Amazon RDS DB instances that use the default DB parameter group. The database specialist needs to associate a custom parameter group with some of the DB instances.

After the database specialist makes this change, when will the instances be assigned to this new parameter group?

- A. Instantaneously after the change is made to the parameter group

- B. In the next scheduled maintenance window of the DB instances

- C. After the DB instances are manually rebooted

- D. Within 24 hours after the change is made to the parameter group

Answer:

C

Explanation:

To apply the latest parameter changes to that DB instance, manually reboot the DB instance.

Reference: https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/USER_WorkingWithParamGroups.html
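Until the reboot happens, RDS reports the newly associated parameter group with a `ParameterApplyStatus` of `pending-reboot`. The helper below is a small sketch that reads a `DescribeDBInstances` response and lists the instances still waiting for a reboot; the sample response is trimmed and its identifiers are hypothetical.

```python
def instances_pending_reboot(describe_response, parameter_group):
    """List instance IDs whose association with `parameter_group` awaits a reboot."""
    pending = []
    for instance in describe_response.get("DBInstances", []):
        for group in instance.get("DBParameterGroups", []):
            if (
                group.get("DBParameterGroupName") == parameter_group
                and group.get("ParameterApplyStatus") == "pending-reboot"
            ):
                pending.append(instance["DBInstanceIdentifier"])
    return pending


if __name__ == "__main__":
    # Trimmed, hypothetical DescribeDBInstances response.
    sample = {
        "DBInstances": [
            {
                "DBInstanceIdentifier": "orders-db",
                "DBParameterGroups": [
                    {"DBParameterGroupName": "custom-pg", "ParameterApplyStatus": "pending-reboot"}
                ],
            },
            {
                "DBInstanceIdentifier": "reports-db",
                "DBParameterGroups": [
                    {"DBParameterGroupName": "custom-pg", "ParameterApplyStatus": "in-sync"}
                ],
            },
        ]
    }
    print(instances_pending_reboot(sample, "custom-pg"))
```

Each instance returned would then need a manual reboot (for example through the `RebootDBInstance` API) before the custom parameter group takes effect.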

Question 15

A Database Specialist is designing a disaster recovery strategy for a production Amazon DynamoDB table. The table uses provisioned read/write capacity mode, global secondary indexes, and time to live (TTL). The Database Specialist has restored the latest backup to a new table.

To prepare the new table with identical settings, which steps should be performed? (Choose two.)

- A. Re-create global secondary indexes in the new table

- B. Define IAM policies for access to the new table

- C. Define the TTL settings

- D. Encrypt the table from the AWS Management Console or use the update-table command

- E. Set the provisioned read and write capacity

Answer:

B C

Explanation:

Reference: https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/HowItWorks.ReadWriteCapacityMode.html
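According to the DynamoDB backup and restore documentation, a restored table keeps its indexes and provisioned capacity, but settings such as TTL, IAM policies, auto scaling policies, tags, and stream settings have to be set up again by hand. The sketch below re-enables TTL with the boto3 DynamoDB client method `update_time_to_live`; the table and attribute names in the usage note are hypothetical.

```python
def restore_ttl(dynamodb, table_name, ttl_attribute):
    """Re-enable TTL on a restored table.

    `dynamodb` is expected to behave like boto3.client("dynamodb").
    """
    return dynamodb.update_time_to_live(
        TableName=table_name,
        TimeToLiveSpecification={
            "Enabled": True,
            # Must match the attribute the original table used for expiry.
            "AttributeName": ttl_attribute,
        },
    )
```

A real run would look something like `restore_ttl(boto3.client("dynamodb"), "Payments-restored", "expires_at")`, with IAM policies for the new table ARN updated separately.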